
As the title says.

🐧

<img alt="windows GIF" data-ratio="56.50" height="140" style="background:rgb(0,255,153) none repeat scroll 0% 0%;" width="248" data-src="  &ct=g" src="/applications/core/interface/js/spacer.png" />


<img alt="Windows Error GIF" data-ratio="100.00" height="248" style="background:rgb(0,255,153) none repeat scroll 0% 0%;" width="248" data-src="  &ct=g" src="/applications/core/interface/js/spacer.png" />


Considering that the majority of computer systems and SoC devices throughout the world that use a base operating system are running a customized flavor of either GNU/Linux or BSD, I would say that answers the question of which is better.

The only reason people still use Windows is its market share for desktop operating systems. People are used to Windows, or they use it for work and can't be bothered to learn a whole new OS even if it would end up being better. Windows 10 isn't terrible once you spend the 1-2 hours it generally takes after a fresh install to get rid of all the advertising, telemetry/tracking junk, risky/unneeded services and all the unneeded bloat that M$ feels they need to shove down our throats.

Linux and BSD have the problem of not having enough adoption/market share on the desktop to force more software and hardware companies to release versions of their software and drivers for Linux and BSD.

If you have an Android-based device or a Chromebook, then you are using Google's customized version of Linux. If you are using an iOS device or OS X, then you are using Crapple's customized version of a BSD-based OS. Most home Wi-Fi routers also run a stripped-down flavor of Linux or BSD.

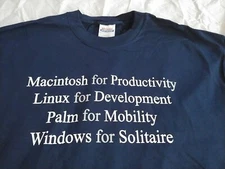

BTW this is tongue-in-cheek, all in good fun...not to be taken seriously. I'm afraid Sean has underestimated how much of his fanbase runs Linux, though. I have a feeling this will be pretty hilarious 🤪😆

I know, I just figured I would give it a serious response more as food for thought.

<img alt="82 Best Microsoft Humor images | Humor, Microsoft, Funny" data-ratio="113.98" style="width:229.859px;height:262px;" width="236" data-src="  &f=1&nofb=1" src="/applications/core/interface/js/spacer.png" />


<img alt="Funny Mac Users Swear T-shirt makes everyone laugh" data-ratio="75.08" style="width:262px;height:262px;" width="999" data-src="  &f=1&nofb=1" src="/applications/core/interface/js/spacer.png" />


Everyone knows Windows is better. Is there a version of Linux with gardening support? Nope. Windows NT has support for gardening built right in with its Dynamic Hose Configuration Protocol.

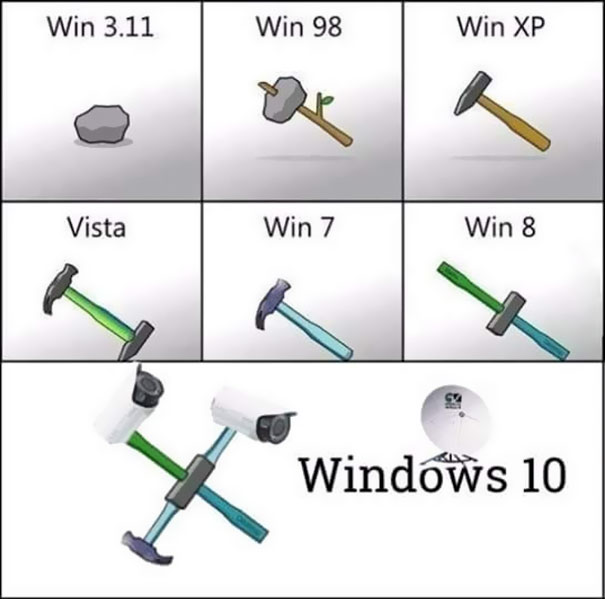

<img alt="Windows Throughout The Years" data-ratio="99.01" height="599" style="height:auto;" title="Windows Throughout The Years" width="605" data-src="  " src="/applications/core/interface/js/spacer.png" />

I second this! Last Windows OS I could tolerate (or even partially enjoy) was Win 7. After that, it's all downhill from there.

EDIT: I run Ubuntu 18.04 LTS x64 at the time of this post. Need to upgrade to 20.04 LTS...but don't feel like reinstalling everything just yet.

These are pretty spot-on (where I found the above picture too): https://www.boredpanda.com/funny-microsoft-windows-jokes/

6 hours ago, Sid Genetry Solar said: Last Windows OS I could tolerate (or even partially enjoy) was Win 7. After that, it's all downhill from there.

XP is where I got off the lolsoft ship. Windows ME seemed like peak awful, then the switch to the NT kernel really stabilized things for a while, only for Vista to come along and consume half the resources on your modern high-end gaming rig... which btw, can it run Crysis?

A lot of people who discover Linux evangelize hard. I used to, but don't anymore; it just tends to annoy others and cause me more work =). Some unfortunate news for <a contenteditable="false" data-ipshover="" data-ipshover-target="/profile/1-sean-genetry-solar/?do=hovercard" data-mentionid="1" href="/profile/1-sean-genetry-solar/" rel="">@Sean Genetry Solar</a>: like it or not, Microsoft is adopting a lot of Linux tech ( https://docs.microsoft.com/en-us/virtualization/windowscontainers/deploy-containers/linux-containers ), even into their core OS ( https://docs.microsoft.com/en-us/windows/wsl/install-win10 ), that professionals and businesses are demanding ( https://www.howtogeek.com/249966/how-to-install-and-use-the-linux-bash-shell-on-windows-10/ ).

Also don't underestimate the effect of Apple switching to the M1 chip or NVIDIA buying ARM. It's quite possible that consumer-grade computing is on the verge of shifting architectures to ARM wholesale, and then Windows will at least temporarily lose its greatest advantage: its immense historical software library. Windows as you know it has only run on a handful of architectures in its history, whereas Linux has run and continues to run on most prolific architectures, even some obscure ones. It is definitely better poised to compete on ARM than Windows is.

It's really kind of silly to compare Windows to Linux... One (Linux) is a kernel, the other (Windows) is kernel + userspace + UI + etc... Microsoft could even get out of the game of OSes altogether, opting to ship a "Windows" running the Linux kernel with their own UI on top, and instead contribute to WINE. I'm surprised it doesn't occur to many that WINE could eventually surpass the official Win32/Win64 API implementation entirely. In fact, it has in some ways already (like backwards compatibility).

<img class="ipsImage ipsImage_thumbnailed" data-fileid="367" data-ratio="62.40" width="625" alt="inevitability.jpg.c512bba9833bf92c8e12199e809f3ff0.jpg" data-src="/monthly_2021_06/inevitability.jpg.c512bba9833bf92c8e12199e809f3ff0.jpg" src="/applications/core/interface/js/spacer.png" />

1 hour ago, kazetsukai said: Also don't underestimate the effect of Apple switching to the M1 chip or NVIDIA buying ARM, it's quite possible that consumer grade computing is on the verge of shifting architectures to ARM wholesale, then Windows will at least temporarily lose its greatest advantage- its immense historical software library. Windows as you know it has only run on a handful of architectures in its history whereas Linux has and continues to run on most prolific architectures, even some obscure ones, it is definitely better poised to compete on ARM than Windows is.

I actually worry about the future of computing if we move away from full fat CPUs over to RISC and SMT platforms, due to the limitations and bottlenecks it will cause down the road for those who actually use their computers for more than consuming content online and basic tasks that often lend themselves well to being parallelized. The single biggest reason that the M1 and ARM-based chips look so good on paper is that they are substantially simpler in design because so much has been taken away. They don't appear to be tested apples to apples across all the instruction sets that you have available on X86/64-based CPUs, so only the things they can run natively are being showcased, and all of those native tasks lend themselves well to heavy simultaneous multithreading. The minute you have to emulate or add a virtualization layer on top to run an instruction set that isn't supported, you realize that they are not a replacement for X86/64 in any real way.

That being said, because they have a reduced set of instructions they are able to focus more on parallelization which is where pretty much all of the performance is coming from. With all of the parallelization they are also able to reduce the clock speed substantially to lower power consumption drastically, but that also means any task that can't be parallelized or requires many cycles will take far longer to complete.

Just imagine how fast the M1 could be if it was able to hit 5+ GHz on air. But then it would still have the same limitations because of the architecture, plus added timing issues, and the power consumption would probably be on the order of 500+ watts due to the transistor count.
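The worry above about tasks that can't be parallelized is usually put in numbers with Amdahl's law: if only a fraction of a job can run in parallel, the serial remainder caps the overall speedup no matter how many cores you throw at it. A minimal sketch in Python, where the function name and the example fractions are made up purely for illustration (they are not measurements of any real chip):

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a task can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part always takes its full time; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical workloads, chosen only to show the shape of the curve.
    for fraction in (0.50, 0.90, 0.99):
        for workers in (4, 8, 64):
            speedup = amdahl_speedup(fraction, workers)
            print(f"{fraction:.0%} parallel, {workers:>2} workers: {speedup:.2f}x")
```

Note this says nothing about RISC vs CISC by itself; it only shows why single-thread speed still matters for whatever portion of a job stays serial.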

I actually worry about the future of computing if we move away from full fat CPUs over to RISC and SMT platforms due to the limitations and bottlenecks it will cause down the road for those who actually use their computers for more than consuming content online and basic tasks that often lend themselves well to being parallelized.

Performance and parallelization go hand-in-hand if you have lots of computation to do. There's little reason, for instance, that rendering pixels from a scene cannot be heavily parallelized. Or cryptography, or crunching data in big files. I guess the reasonable steel-man would be to ask what kind of tasks need to be done on general-purpose computers that cannot be parallelized well, and why. Is it the task that cannot be parallelized, or is it the technique accomplishing the task that is unsuitable for parallelization?
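To make the pixel example concrete, here is a rough Python sketch of why rendering splits up so easily: each row depends only on its own coordinates, so rows can be handed to workers in any order and the result is the same as the serial loop. The `shade` function and the image size are hypothetical stand-ins, not a real renderer. `ThreadPoolExecutor` is used only to keep the sketch self-contained; in CPython you would likely swap in `ProcessPoolExecutor` (same `map` interface) to get actual CPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48  # hypothetical image size


def shade(x: int, y: int) -> int:
    """Hypothetical per-pixel function: the result depends only on (x, y)."""
    return (x * x + y * y) % 256


def render_row(y: int) -> list:
    """Render one row; it shares no state with any other row."""
    return [shade(x, y) for x in range(WIDTH)]


def render_serial() -> list:
    """Baseline: render the rows one after another."""
    return [render_row(y) for y in range(HEIGHT)]


def render_parallel(workers: int = 4) -> list:
    """Hand independent rows to a pool of workers; map() preserves row order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))


if __name__ == "__main__":
    print("same image:", render_parallel() == render_serial())
```

The interesting cases for the steel-man are the opposite kind: loops where step N needs the result of step N-1, so there is nothing to hand out to a second worker.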

The single biggest reason that the M1 and ARM based chips look so good on paper is that they are substantially simpler in design because so much has been taken away. They don't appear to be testing them apples to apples for all instruction sets that you have available to you on X86/64 based CPUs so they are only showcasing the things that they can run natively and all native tasks lend themselves well to heavy simultaneous multithreading.

I'd argue the extensive number of special instructions illustrates a weakness in X86, not a strength. Let's say, for argument's sake, some specific ARM chip manufacturer added transistors to accelerate SSE2 or MMX extensions: it's the same thing. The question is, for the vast majority of use cases, do you really need all of those extensions? Or did those extensions come from use cases where X86 fell short?

I must be misunderstanding you. Let's say you have some program written in C. Of course you need to compare the natively compiled ARM code's performance on the ARM chip to natively compiled X86 code on X86 variants to get an apples-to-apples comparison, not how well the ARM chip will execute the kind of instructions present in the X86 binaries.

The minute you have to emulate or add a virtualization layer on top to run an instruction set that isn't supported you realize that they are not a replacement for X86/64 in any real way.

Again, I must be missing something in your reasoning. Does this apply in reverse? Does X86 emulate / virtualize ARM instruction sets flawlessly at native speeds? 'Cause I never got that memo.

That being said, because they have a reduced set of instructions they are able to focus more on parallelization which is where pretty much all of the performance is coming from. With all of the parallelization they are also able to reduce the clock speed substantially to lower power consumption drastically, but that also means any task that can't be parallelized or requires many cycles will take far longer to complete.

Does parallelization come from somewhere other than just more (redundant) cores? I think the performance comes from IPC. If, in one of those 5 billion cycles per second (5 GHz), you complete one instruction on ARM, but on an X86 chip it takes even just two cycles to complete the same instruction, the ARM chip is going to win by a theoretical 100% margin. I think this is the potential RISC offers: to complete instructions in fewer cycles than CISC architectures. If you need to do complex work, don't try to make it happen at the instruction set level. Make your instruction set as simple and as cheap as possible in terms of cycles consumed, and then leave the complex computations to the programmers and their code, where they can parallelize. It's not a functional difference as much as it is a philosophical difference.
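The 100% figure above is just clock rate divided by cycles per instruction. A small worked sketch in Python, using the hypothetical numbers from the paragraph (same 5 GHz clock, one cycle vs two cycles per instruction); these are not real measurements of any ARM or X86 part, and real cores issue several instructions per cycle, which this ignores:

```python
def instructions_per_second(clock_hz: float, cycles_per_instruction: float) -> float:
    """Throughput of a simple one-instruction-at-a-time core."""
    return clock_hz / cycles_per_instruction


CLOCK_HZ = 5e9  # 5 GHz, the hypothetical clock from the post

one_cycle_chip = instructions_per_second(CLOCK_HZ, 1)  # 1 cycle per instruction
two_cycle_chip = instructions_per_second(CLOCK_HZ, 2)  # 2 cycles per instruction

# Relative advantage of the one-cycle chip over the two-cycle chip.
advantage = (one_cycle_chip - two_cycle_chip) / two_cycle_chip

if __name__ == "__main__":
    print(f"one-cycle chip: {one_cycle_chip:.2e} instructions/s")
    print(f"two-cycle chip: {two_cycle_chip:.2e} instructions/s")
    print(f"advantage: {advantage:.0%}")
```

The comparison only holds if both chips need the same number of instructions for the job; a simpler instruction set may need more of them, which is the other half of the trade-off.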

Disclaimer: I'm not a chip/instruction set expert, I'm a Java programmer, don't even do memory management 😃

The original RISC concept was a rigid, simple ISA specifically intended for high instruction throughput and easy parallelism resulting from the much simpler design of the execution unit. Instructions generally execute in a single clock cycle once all the data is available. Memory accesses are all aligned with the native bit width of the CPU: 16, 32, 64 bits, etc. All the massive amount of silicon that currently goes into parallelising and optimising the x86 ISA (out-of-order execution and all that ails it, hellllo Spectre, etc.) is not needed. Even the current ARM ISA is bloated by genuine RISC standards. The heavy lifting of code optimisation and parallelisation was moved to the compiler, where it could evolve and develop without changes to the silicon.

Anything CISC can do RISC can do too, and vice-versa. The difference in performance comes down to the hardware and software engineers.

Hey now, I play M$ Solitaire on my Samsung G-10. And where's the OS/2 for banking?